Around 7 Meters is More Fun

2023

joystick, display, customized software, camera and metal, machine learning algorithms

Dimensions variable (minimum size: 7 meters)

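“Around 7 meters is more fun” creates a particular situation and, through that situation and its constraints, transforms the way people communicate. To understand the visual effects on display, participants not only rely on the observers' descriptions, depictions, and expressions but also need them to convey meaning. This interaction fosters closer cooperation between participants and observers, bridges the gap between the seen and the unseen, and redefines the many facets of human perception. The work also uses machine learning to enforce two rules: the participant operating the joystick cannot see the result they create, and observers cannot record that result with a camera. These two rules give the software's behavior something close to human emotional logic, producing a particular experience of perception and interaction and prompting a rethinking of the relationship between the visible and the invisible.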

Description of the project

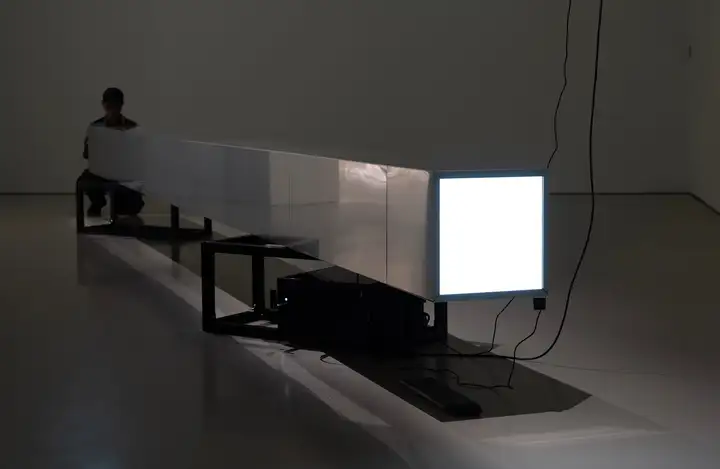

Around 7 Meters is More Fun displays the image trajectory drawn in real time with a joystick on a remote screen. While observers can see the images generated on a screen situated at the opposite end of a 7-meter-long structure, the participants who manipulate the joystick at the other end are deprived of this view. The resulting images also cannot be captured or recorded. Consequently, participants must rely on the descriptions or expressions of the observers to conjure an unseen scene.

The work creates a unique context that transforms communication by exploiting on-site elements and constraints on visibility. To understand the visual effects generated, participants not only rely on the observers' descriptions, depictions, and expressions but also need them to convey meaning. This interaction triggers a closer collaboration between participants and observers, bridging the gap between the seen and the unseen and redefining the multiplicity of human perception. Machine learning tools are combined with prompts and with object and motion recognition software to prevent participants from seeing the output of their own interventions and to prevent observers from using their cameras to record these results. These two rules bring the software closer to human emotional and social logic, creating a unique experience of perception and interaction from which to rethink the relationship between the visible and the invisible.

In an environment saturated with internet information, where technology and media have significantly shortened attention spans, the way humans receive sensory stimuli has changed along with the way they receive information. Communication and comprehension are markedly different from what they were in the past. Around 7 Meters is More Fun enables observers or audiences to become witnesses, translating visual experiences into language and other non-visual forms of communication.

This work involves two distinct machine learning models and algorithms. ReID (Person Re-Identification) helps the system remember who is operating the joystick. When the operator approaches the screen, the system erases the image. The system can recognise the individual for a certain period of time, even after they have left. GroundingDino is used to detect image and video capture devices.
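The two rules described above can be pictured as a small decision step that runs on every camera frame. What follows is only a minimal sketch under stated assumptions, not the installation's actual software: the class and function names, the similarity threshold, the memory window, the distance cutoff, and the label set are all hypothetical. It also assumes that a ReID model has already turned each visible person into an appearance vector, that an open-vocabulary detector such as GroundingDino has already returned text labels for the frame, and that a detected capture device is handled by erasing the image, which the description above does not specify.

```python
from dataclasses import dataclass
from math import sqrt
from typing import Optional, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two appearance vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = sqrt(sum(x * x for x in a))
    norm_b = sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


@dataclass
class Person:
    embedding: Sequence[float]    # assumed output of a ReID model
    distance_to_screen_m: float   # assumed to come from camera tracking


@dataclass
class OperatorMemory:
    """Remembers the joystick operator for a limited time (hypothetical values)."""
    ttl_s: float = 120.0
    match_threshold: float = 0.8
    embedding: Optional[Sequence[float]] = None
    last_seen_s: float = 0.0

    def remember(self, embedding: Sequence[float], now_s: float) -> None:
        # Called while someone is at the joystick.
        self.embedding = embedding
        self.last_seen_s = now_s

    def is_operator(self, person: Person, now_s: float) -> bool:
        # Forget the operator once the memory window has passed.
        if self.embedding is None or now_s - self.last_seen_s > self.ttl_s:
            return False
        return cosine_similarity(self.embedding, person.embedding) >= self.match_threshold


# Hypothetical labels a text-prompted detector might be asked to find.
CAPTURE_LABELS = {"camera", "phone"}


def should_erase(memory, people, detected_labels, now_s, near_m=2.0):
    """Erase if the remembered operator is near the screen or a capture device is seen."""
    operator_close = any(
        p.distance_to_screen_m <= near_m and memory.is_operator(p, now_s)
        for p in people
    )
    device_seen = any(label in CAPTURE_LABELS for label in detected_labels)
    return operator_close or device_seen
```

In this sketch the memory window is what lets the system keep recognising an operator who has left the joystick and walked toward the screen; once the window expires, the same person is treated as an ordinary observer.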

Short project summary

Around 7 Meters is More Fun displays the image trajectory generated by participants manipulating a joystick on a remote screen. Only observers can see the images that appear on the screen; the participants themselves cannot see them, and the images cannot be captured or recorded. Consequently, participants must rely on the descriptions or expressions of the observers to conjure an unseen scene. Here, I create a unique interactive situation that compels people to reconsider the distinctions and connections between the seen and the unseen.

Photo by Eslite gallery

Cheng Hsien-Yu (鄭先喻) solo exhibition [.user ] | 2023 9/16 - 10/14 | ESLITE GALLERY (誠品畫廊), from ESLITE GALLERY on Vimeo.